Engineering Designer, the journal of the Institution of Engineering Designers, has published (March/April 2006 issue) an article I wrote late last year introducing the ‘architectures of control’ concept simply to a design/engineering audience. It aims for as neutral a point of view as possible. (Readers may also be interested in a shorter article [PDF] published last year in the University of Cambridge’s Gown magazine.)

As Engineering Designer doesn’t yet publish its content online, editor Mel Armstrong has allowed me to make the article available here – PDF [1.4 Mb] complete with images – or just the text (plus a diagram), after the jump.

From Engineering Designer, March/April 2006, published by the Deeson Group Ltd on behalf of the Institution of Engineering Designers.

Architectures of Control in Product Design

by Dan Lockton

‘User experience’ and interaction design are important parts of the development of most modern consumer products, and the aim generally is to enhance the user’s relationship with the product. Strengthening the user’s mental model of the product’s functions makes the user feel more confident and hence be more productive with the device, whatever it may be.

Nevertheless, there is a small–but increasing–trend towards explicitly attempting to constrain, restrict and lock down users’ behaviour through the way that the product is designed: ‘architectures of control’ [1]. At present, this thinking is most prevalent in the design of digital media products where technology developments make it easier to implement, but, as we shall see, there are numerous–if disparate–examples from across many design fields.

I have defined architectures of control in design as ‘features, structures or methods of operation designed into any planned system with which a user interacts, which are intended to enforce or restrict certain user behaviour.’

Examples

In the digital environment, the most common architectures of control are those related to digital rights management (DRM)–the Sony ‘rootkit’ débâcle of late 2005 [2] brought some of the issues involved to much greater public attention.

There are many forms of DRM, from mere copy-prevention algorithms (as found on DVDs) to ‘lockware’ which locks particular content (music, video, software or documents) to a particular manufacturer’s products, and even to a particular individual user. Most engineers are already familiar with these types of system–sometimes called technical protection measures (TPMs)–from the widespread use of ‘dongles’ (hardware locks) and node-locked licences in high-value CAD software, but the coming generations of ‘trusted’ computing products [3] have the potential to take the idea much further: computers which can monitor and report on any behaviour intended to circumvent DRM systems, or the use of unauthorised hardware (an analogy would be if your car could tell that you used, say, a replacement air filter from Halfords as opposed to one from the manufacturer, and reported this, invalidating your warranty).

Printer cartridges from some manufacturers already ‘feature’ automatic ‘expiry’ at a certain date, regardless of how much ink is left. Equally, some manufacturers have sought to prevent refilled cartridges from being usable, again using an embedded ‘handshake’ chip.

A number of architectures of control in electronic products go beyond DRM in attempting to plug the ‘analogue hole’–however effective digital copy-prevention mechanisms are, one can still capture the analogue signal, whether the quality is heavily degraded (e.g. using a camcorder to film in a cinema) or as good as the original (e.g. circumventing CD copy-prevention by recording the audio stream). Technology intended to detect certain types of content in images or video (e.g. trademarks or specified copyright works) and prevent it being recorded is already under development; Adobe Photoshop now includes a banknote detection algorithm which prevents scanned currency being saved or edited, and in 2004 Hewlett-Packard patented a system for remotely degrading the quality of an image captured by a digital camera.

The use of architectures of control in digital products and systems is gaining momentum, backed up by legislation: the Digital Millennium Copyright Act in the US and the EU Copyright Directive both criminalise the circumvention of copy-prevention measures, even for experimental purposes, and the US’s proposed Digital Transition Content Security Act [4] would have even more implications for designers, as we will see later.

Outside of the digital realm, it is possible to characterise as architectures of control many of the ‘forcing functions’ employed by engineers and product designers. Donald Norman describes forcing functions as ensuring that “actions are constrained so that failure at one stage prevents the next step from happening” [5]. In practice this includes safety interlocks, such as the car seat belt-ignition interlock championed by Lee Iacocca in the 1970s.

Forcing functions can be implemented electronically or much more simply through the layout of the product. A public telephone which requires the handset to be lifted before inserting a payment card could simply have the card slot covered by the handset until it is lifted [6]; one of the most ubiquitous examples is the long earth pin on UK electrical plugs, which upon insertion pushes away the sprung guard covering the live and neutral terminals. A number of techniques used in mistake-proofing during manufacturing or assembly (poka-yoke) would also fall into this category.
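For readers who think more readily in software terms, the public telephone example can be sketched as a minimal Python model. This is an illustration only, not a description of any real device: the `Payphone` class, its method names and the `InterlockError` exception are all hypothetical. The point is that the later step simply cannot be carried out until the earlier one has succeeded.

```python
class InterlockError(Exception):
    """Raised when a step is attempted before its prerequisite is met."""


class Payphone:
    """Hypothetical model of a card payphone with a forcing function."""

    def __init__(self):
        self.handset_lifted = False
        self.card_inserted = False

    def lift_handset(self):
        # Lifting the handset uncovers the card slot.
        self.handset_lifted = True

    def insert_card(self):
        # The forcing function: the slot is covered until the handset is lifted,
        # so this step fails unless the previous one has happened.
        if not self.handset_lifted:
            raise InterlockError("card slot is covered: lift the handset first")
        self.card_inserted = True

    def dial(self, number):
        # Dialling is in turn constrained by successful card insertion.
        if not self.card_inserted:
            raise InterlockError("no payment card inserted")
        return f"dialling {number}"
```

In the physical product the same constraint costs nothing to enforce: the handset itself is the guard, so no check or error message is needed.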

Designers working with architects and town planners are also increasingly embedding architectures of control into the built environment, from the ‘brute force’ approach of speed humps, chicanes and kerbs with bolts in them (to prevent skateboarders using them), to the more subtle, such as café or bus stop seating intended to be just uncomfortable enough to prevent people lingering for too long. A US company, Belson, produces an attractive park bench with a central armrest intended specifically to prevent members of the public sleeping on it [7].

Common themes: intentions

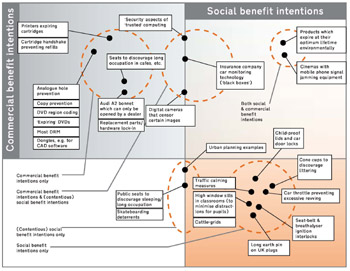

Reviewing the above (very brief) look at examples from different fields, we can identify two main strategic motivators behind architectures of control: intended commercial benefit and (sometimes contentious) intended public, or ‘social’, benefit, which are by no means mutually exclusive. In some cases the two intentions can coincide–for example, a cinema chain which uses a Faraday cage or other signal jamming device to prevent mobile phone reception both improves the experience for the majority of its customers, and (potentially) acts in its own interests commercially, even if only through the publicity it will receive for announcing such a measure.

The above diagram attempts to position some of the architectures of control mentioned in this article (together with a few other examples) in a plane representing intended commercial and social benefits. The exercise is extremely subjective and I ask for readers’ appreciation of this; whilst the commercial benefits of any particular design feature could possibly be quantified financially, the issue of social benefit is simply too complex.

The concept of ‘products which expire at their optimum lifetime environmentally’ (based on life cycle analysis) is an interesting one, combining commercial and social (environmental) benefit intentions. Whilst there is insufficient scope within this article to go into the ideas in this area, I have a brief discussion of the concept available on the Internet [8].

Implications for designers

The decision to use ‘architectures of control’ in a particular product may come from the design or engineering team itself, or as part of the specification from management. For example, there has been much debate over whether DRM–now a core part of many media companies’ business models–actually has any net commercial benefit. Whilst it is often presented as a ‘technology push’ phenomenon (“we now have technology that allows us to do this, so we should find an application for it”), it has been suggested by some commentators that DRM’s efficacy is irrelevant as long as it is perceived to be efficacious by the investors who want to see it as part of the business model (i.e. it is to some extent driven by ‘market pull’).

In some cases, increasing corporate demand for architectures of control in products may spur development of new products, particularly if aided by legislation. For example, the US’s proposed (at time of writing) Digital Transition Content Security Act [4] would require a new generation of digital video recorders to be designed with a number of specific features, such as automatically deleting ‘protected’ content after 90 minutes.

There is also an opportunity for designers in companies taking the opposite stance: Chris Weightman, of London design consultancy Tangerine, believes that outside of the companies that have gone strategically down the restriction route, designers will still tend to focus on making the product experience more attractive to the user. This works against architectures of control: indeed, there may be a commercial advantage to being ‘second’ in the market (a ‘me-too’ product) but offering a less restricted product:

“The only distinctive selling point of some companies’ products–particularly in the portable music player market–is that they allow the user to get round the restrictive architecture of the market leaders. If design can build on that distinctiveness by making the product appealing in other ways as well, then second place could well become first place.”

It is worth remembering that we are all consumers as well as engineers and designers, and so we are all bound to feel frustration and probably much inconvenience at certain more restrictive architectures of control as they become more common. In some cases, established consumer rights may be affected–you wouldn’t expect to have to buy your whole music collection over again if your CD player breaks, but in some markets (e.g. the Japanese chaku-uta mobile phone ringtones), this is already the case, thanks to very restrictive DRM.

Equally, the ‘freedom to tinker’ [9] with machines and products–which has probably inspired so many engineers to go into the profession–may be increasingly threatened. For example, the Audi A2 bonnet (as mentioned on the diagram), which is designed not to be opened by the car’s owner, but only by an authorised dealer, might be the thin end of a wedge. Aside from mere irritation, such practices may have a considerable long-term impact on innovation, which often depends at least to some extent on reverse-engineering of existing technology to understand it better.

Overall, architectures of control in design are a trend worth considering, and whilst I recognise the intended commercial and social benefits of many such implementations, I feel that the phenomenon deserves a certain amount of scrutiny from those in the technology community.

More information

For more information on the ideas and examples in this article, please visit the ‘Architectures of Control in Design’ website and weblog at http://www.danlockton.co.uk/architectures – the site is frequently updated with new examples of architectures of control and comments from readers. If you have any questions or suggestions, please don’t hesitate to get in touch: dan@danlockton.co.uk

Dan Lockton GradIED is a freelance design engineer and writer, formerly an engineer for Sinclair/Daka Research and a designer for Mayhem UK. He studied Industrial Design Engineering at Brunel, and in 2005 completed a Cambridge-MIT Institute Master’s in Technology Policy at the University of Cambridge; Dan’s book Rebel Without Applause on the Reliant Motor Company was reviewed by Barry Dagger in Engineering Designer, October 2003.

[1] The term ‘architectures of control’ was popularised by Lawrence Lessig, founder of Stanford Law School’s Center for Internet & Society–with specific reference to the way that the architecture of the Internet regulates how it can be used–in his Code, and Other Laws of Cyberspace, Basic Books, New York, 1999

[2] See, e.g. ‘Sony’s long-term rootkit woes,’ BBC News website, 21.11.2005, http://news.bbc.co.uk/2/hi/technology/4456970.stm

[3] Anderson, R., ‘Trusted’ Computing Frequently Asked Questions, http://www.cl.cam.ac.uk/~rja14/tcpa-faq.html

[4] See, e.g. ‘A lump of coal for consumers: analog hole bill introduced,’ Electronic Frontier Foundation, 16.12.2005, http://www.eff.org/deeplinks/archives/004261.php

[5] Norman, D. The Design of Everyday Things, Basic Books, New York, 2002, pp.132-140

[6] I am grateful to Steve Portigal (http://www.portigal.com) for this example.

[7] ‘Recycled Plastic Georgetown Bench,’ Belson Outdoors, Inc., http://www.belson.com/gbrec.htm

[8] See ‘Case study: Optimum Lifetime Products,’ http://architectures.danlockton.co.uk/?page_id=19

[9] See http://www.freedom-to-tinker.com
